Not some mere addition

This has absolutely nothing to do with computers, technology or programming, but I found it interesting as an exercise in shifting perspectives regardless.

In philosophy there is a somewhat famous problem called, depending on who you talk to, either the “Repugnant conclusion” or the “Mere addition paradox”. It considers what happens when your morality has to take into account all the people who could be alive and what kind of world would be the best to live in.

Basically the idea is simple. Consider a world, A, in which there is a group of people who all have exactly the same happiness. Perhaps this is a communist utopia that actually worked or perhaps this is a world with just one band of human hunter-gatherers; either way life is pleasant, humans live long lives with fulfilling friendships and all that jazz.

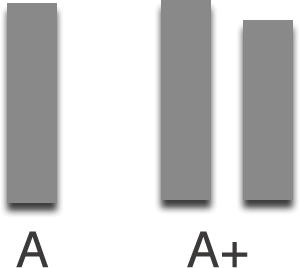

Now let’s add another world, A+, that consists of two populations: the first one being exactly the same as the one in A and the second group with the same population size, but a slightly lower happiness. If you live in that group you might have as good a life as the other group, but have a less fulfilling love life. You can see both worlds side by side in figure 1.

Figure 1: Two worlds, A and A+

Living in world A+ cannot be worse than living in world A, because either you belong to the group that has the high happiness level, in which case life is exactly like it was before, or you live in the new group that has a slightly lower happiness level, but you are at least alive and your life is definitely worth living. The average happiness may be lower than in world A, but the total sum of happiness is significantly larger.
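To make the "lower average, larger total" point concrete, here is a minimal sketch. The population sizes and happiness scores are made up purely for illustration; nothing in the argument depends on these particular values.

```python
# Hypothetical numbers, chosen only for illustration:
# 100 people per group, happiness 10 in the original group, 9 in the new one.
world_a = [10] * 100
world_a_plus = [10] * 100 + [9] * 100  # same first group, plus a slightly less happy one


def total_happiness(world):
    """Sum of everybody's happiness."""
    return sum(world)


def average_happiness(world):
    """Happiness per person."""
    return sum(world) / len(world)


for name, world in [("A", world_a), ("A+", world_a_plus)]:
    print(name, total_happiness(world), average_happiness(world))
```

With these assumed values the average drops from 10 to 9.5 while the total nearly doubles, which is the trade-off the paragraph above describes.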

So far, not so repugnant, but what if we consider a third world? It has exactly as much happiness as world A+, but spread out over a far larger population.

Of course this means that it has a shitty average level of happiness: while it is positive (i.e. the people involved would rather be alive than dead), it is just barely that. This world is called B.

Figure 2: A world with a large number of people living a life barely worth living.

Remember that we concluded A+ would be at least as good a world to live in as A, because although the average happiness went down, the total amount of happiness was at least constant and a lot of new people got a chance to live?

Well, since the total sum of happiness in world B is the same as in world A+ and we added a lot of people, world B has to be at least as good as world A, right?

And that is the Repugnant conclusion: that for any given world we should add more and more people until the standard of living is such that everybody lives lives that are barely worth living.

Philosophers may be mostly useless, but they are good at naming, because that truly is repugnant to consider. It also seems fundamentally flawed, because you definitely don’t consider world B to be better to live in.

If you get to choose which world to live in, world A is the best, world A+ a close second (world A+ is just as good if you also get to choose which subgroup you live in) and world B a very distant third. This is exactly what our intuitions suggest, and so not very paradoxical.

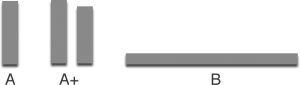

It is not very paradoxical because your intuitions are assuming that you get to live in the first place. Suppose that world A could hold 4000 people, A+ could hold 8000 and world B could hold 80000, and that the number of people who could exist was 100000, with you being just one of them. Which world would you choose?

Not A: it would be nice to live in, but there is a less than 5% chance that you get to live at all in that case. A+? Well, it is almost as nice as A and you get an 8% chance of living there. World B? Well, it beats the pants off the big empty nothingness and you have a whopping 80% chance of living there.
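The percentages above are just each world's capacity divided by the pool of possible people. A quick sketch of that arithmetic, reading the pool as 100000 people and assuming each of them is equally likely to be one of those who gets born (the names below are only for illustration):

```python
from fractions import Fraction

# Capacities from the text; the pool of possible people is read as 100000.
POSSIBLE_PEOPLE = 100_000
WORLD_CAPACITY = {"A": 4_000, "A+": 8_000, "B": 80_000}


def chance_of_living(capacity, possible_people=POSSIBLE_PEOPLE):
    """Probability of being born, assuming every possible person is equally likely."""
    return Fraction(capacity, possible_people)


for name, capacity in WORLD_CAPACITY.items():
    print(f"World {name}: {float(chance_of_living(capacity)):.0%} chance of living")
```

This gives 4% for world A, 8% for A+ and 80% for B.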

Looking at it now, world B doesn’t seem nearly as bad, does it?

Figure 3: all the worlds, side by side.

In philosophy that is called the “Veil of Ignorance”: since you do not know which position in a society you will take, you are going to try to get the society to be more just overall. You don’t want to create a society with a lot of slaves if you might end up being a slave yourself.

There is a more extreme version of the Veil of Ignorance: rather than not knowing your station in life, you know for certain exactly what position you will have: at the bottom of society. In this case things are even worse: unless the world is big enough for everybody, you don’t get to live at all.

In this case, we will have to add a fourth world, B+, that is big enough for everybody to be born, but where the lives are even closer to not being worth living than in world B. Given that you are always going to end up at the bottom, you would choose that world simply so that you get a chance to live at all.